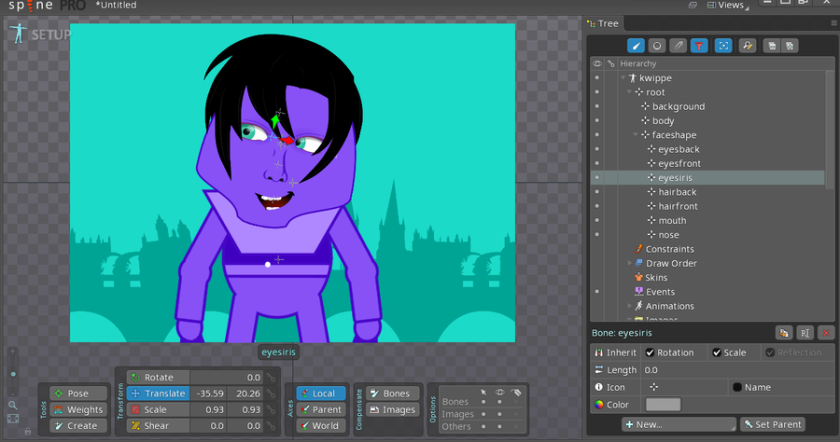

The future of my vector character generator may very well be as an artwork generator for developers who want to quickly create beautiful 2D art, with 1-click export to their favorite animation software. Here is an example I did for Spine.

Kwippe can generate all sorts of random combinations from any of the 4 character sets (man, woman, monster, and alien), and can even interchange parts by importing a character into another dataset. I do that a lot with monster eyes 🙂 From there, switching out eyes, mouths, bodies, and other features is a matter of 1 click, with easy controls to move, recolor, scale, and stretch the different pieces individually. It’s nice not to have to worry about locking all of the layers you DON’T want to edit, which I’m always doing in Illustrator! Kwippe is a bit like an Illustrator for people who would rather have fun presets and quick editing tools than the ability to hand-craft each path. Then, with just 1 click, all of the artwork and necessary JSON is created for Esoteric Software’s Spine animation software.

I preserve the head and body groups separately, as well as any text, props, shapes, or background layer. They are all ready to animate, with nothing else to do in Spine other than have some imagination, which the scene below definitely lacks! 🙂

Since Kwippe allows you to save any scene to the Gallery, you can use it to make another animation later. If you just want to add alternate parts like different eyes, hair, or a background, you can import those parts into your existing project, and everything else will work right out of the box. Since the images are trimmed, they don’t need any manual placement to serve as skins for existing slots/bones.
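To make the slots/bones idea a bit more concrete, here is a rough Python sketch of the kind of skeleton data Spine reads: bones for the head and body groups, one slot per part, and a skin that maps each slot to its trimmed image. This is not Kwippe's actual exporter. The part names, sizes, positions, and the helpers `build_skeleton` and `add_alternate` are all invented for illustration, and the `skins` list layout is an assumption based on newer Spine exports (older ones keyed skins by name).

```python
import json

# Hypothetical parts: which group each belongs to, plus the center and size
# (in pixels) of its trimmed image. All values are invented for illustration.
PARTS = [
    {"name": "body", "group": "body", "x": 0, "y": 120, "width": 200, "height": 240},
    {"name": "mouth", "group": "head", "x": 0, "y": 260, "width": 60, "height": 30},
    {"name": "eyes", "group": "head", "x": 0, "y": 310, "width": 90, "height": 40},
]


def placement(part):
    """Attachment entry: where the trimmed image sits relative to its bone."""
    return {key: part[key] for key in ("x", "y", "width", "height")}


def build_skeleton(parts):
    """Build a Spine-style dict with head/body bones, one slot per part, one skin."""
    bones = [{"name": "root"}]
    bones += [{"name": group, "parent": "root"} for group in ("body", "head")]
    slots, attachments = [], {}
    for part in parts:
        name = part["name"]
        # Slot order doubles as draw order; each slot shows its own image by default.
        slots.append({"name": name, "bone": part["group"], "attachment": name})
        attachments[name] = {name: placement(part)}
    skins = [{"name": "default", "attachments": attachments}]
    return {"bones": bones, "slots": slots, "skins": skins}


def add_alternate(skeleton, slot_name, part):
    """Register another image for an existing slot without touching bones or slots."""
    skin = skeleton["skins"][0]
    skin["attachments"][slot_name][part["name"]] = placement(part)


skeleton = build_skeleton(PARTS)
monster_eyes = {"name": "monster_eyes", "x": 0, "y": 312, "width": 110, "height": 52}
add_alternate(skeleton, "eyes", monster_eyes)
print(json.dumps(skeleton["skins"][0]["attachments"]["eyes"], indent=2))
```

Because the alternate eyes carry their own position and size, adding them only touches the skin entry for the `eyes` slot. The bones, the slot list, and any animation built on them stay as they were.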

I added a few other cool features: the ability to set any scale you want, as well as the ability to output JSON without images (faster if you are using the same parts and just want to update positions). The text and banner creation deserves its own blog post, as there are way too many features to go over quickly.
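The scale and JSON-only options can be pictured along these lines. This is a hypothetical Python sketch, not Kwippe's code: the function `export_plan`, its parameters, the output layout, and the file names are all made up to show the idea of scaling every position and size and skipping the image files when only positions changed.

```python
import json

# Hypothetical part placements in pixels at 100% scale (made-up values).
PARTS = {
    "body": {"x": 0, "y": 120, "width": 200, "height": 240},
    "eyes": {"x": 0, "y": 310, "width": 90, "height": 40},
}


def export_plan(parts, scale=1.0, include_images=True):
    """Return (json_text, image_files) for an export at the given scale.

    With include_images=False only the JSON is produced, which is enough
    when the same images are reused and only positions have changed.
    """
    scaled = {
        name: {key: round(value * scale, 2) for key, value in box.items()}
        for name, box in parts.items()
    }
    json_text = json.dumps({"scale": scale, "attachments": scaled}, indent=2)
    image_files = [f"{name}.png" for name in parts] if include_images else []
    return json_text, image_files


json_text, image_files = export_plan(PARTS, scale=0.5, include_images=False)
print(json_text)
print(image_files)
```

Skipping the images is where the speed-up would come from in a setup like this, since rendering and writing PNGs is usually the slow part and the JSON is just a small text file.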

I’d love to share this project with other developers, so my husband (and dev partner, he’s the “idea” guy 🙂) and I are looking at possibly doing a crowdfunding campaign to help grow this app a bit and get a little outside help. If you are interested in helping or hearing more, give us a shout!